Recently I started investigating relative rotation graphs with a view to perhaps implementing a version of them for use on forex currency pairs. The underlying idea of a relative rotation graph is to plot an asset's relative strength compared to a benchmark against the momentum of this relative strength, in a form similar to a polar coordinate plot that rotates around a zero point representing zero relative strength and zero relative strength momentum. After some thought it occurred to me that rather than using relative strength against a benchmark I could use the underlying relative strengths of the individual currencies, as calculated by my currency strength indicator, against each other. Furthermore, these underlying log changes in the individual currencies can be normalised using the ideas of Brownian motion and then averaged together over different look back periods to create a unique indicator.
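A minimal Python sketch of the normalisation idea follows. It is not the actual indicator code: it assumes that "the ideas of Brownian motion" means scaling an n-bar log change by sigma * sqrt(n), where sigma is the standard deviation of 1 bar log changes, and it works on a single hypothetical price series rather than on individual currency strengths. The function names, look back periods and prices are all made up for illustration.

```python
import math

def log_changes(prices, lookback):
    """n-bar log changes: log(p[t] / p[t - lookback])."""
    return [math.log(prices[t] / prices[t - lookback])
            for t in range(lookback, len(prices))]

def brownian_normalised_average(prices, lookbacks):
    """Average of the latest look back log changes, each scaled by sigma * sqrt(n).

    Under a Brownian motion model the standard deviation of an n-bar log
    change grows as sqrt(n), so dividing by sigma * sqrt(n) puts every
    look back period on a comparable scale before averaging.
    """
    one_bar = log_changes(prices, 1)
    mean = sum(one_bar) / len(one_bar)
    # Sample standard deviation of the 1 bar log changes (assumed estimator).
    sigma = math.sqrt(sum((r - mean) ** 2 for r in one_bar) / (len(one_bar) - 1))
    t = len(prices) - 1  # index of the most recent bar
    scaled = [math.log(prices[t] / prices[t - n]) / (sigma * math.sqrt(n))
              for n in lookbacks]
    return sum(scaled) / len(scaled)

# Hypothetical prices, for illustration only.
prices = [1.1000, 1.1004, 1.1001, 1.1009, 1.1012, 1.1007, 1.1015, 1.1021]
value = brownian_normalised_average(prices, lookbacks=[1, 2, 4])
print(round(value, 3))
```

Without the sqrt(n) scaling the longer look back periods would tend to dominate the average simply because longer log changes are larger in magnitude.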

Expressed as a neural net, the indicator comes down to two lines of code:

```
indicator_middle_layer = tanh( full_feature_matrix * input_weights ) ;

indicator_nn_output = tanh( indicator_middle_layer * output_weights ) ;
```

Of course, prior to calling these two lines of code there is some feature engineering to create the input full_feature_matrix, and the input_weights and output_weights matrices, taken together, are mathematically equivalent to the original indicator calculations. Finally, because this is a neural net expression of the indicator, the non-linear tanh activation function is applied to both the hidden middle layer and the output layer of the net.
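To make the two-layer structure concrete, here is a small Python sketch of the same forward pass using plain lists. The matrix shapes and all numeric values are hypothetical and are not the real features or weights. It also computes the purely linear version (no tanh), which illustrates that for small inputs the tanh squashing changes the output only slightly.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def tanh_layer(inputs, weights):
    """One dense layer without a bias term: tanh(inputs * weights)."""
    return [[math.tanh(v) for v in row] for row in matmul(inputs, weights)]

# Hypothetical shapes: 2 bars x 3 features, 3 x 2 input weights, 2 x 1 output weights.
full_feature_matrix = [[0.10, -0.20, 0.05],
                       [0.00, 0.30, -0.10]]
input_weights = [[0.5, 0.0],
                 [0.5, 0.5],
                 [0.0, 0.5]]
output_weights = [[0.5],
                  [0.5]]

indicator_middle_layer = tanh_layer(full_feature_matrix, input_weights)
indicator_nn_output = tanh_layer(indicator_middle_layer, output_weights)

# The same weighted sums without the tanh squashing, for comparison.
linear_output = matmul(matmul(full_feature_matrix, input_weights), output_weights)

for nn_row, lin_row in zip(indicator_nn_output, linear_output):
    print(round(nn_row[0], 4), round(lin_row[0], 4))
```

Each row of the output corresponds to one bar, so the whole history of the indicator can be computed in a single pass over the feature matrix.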

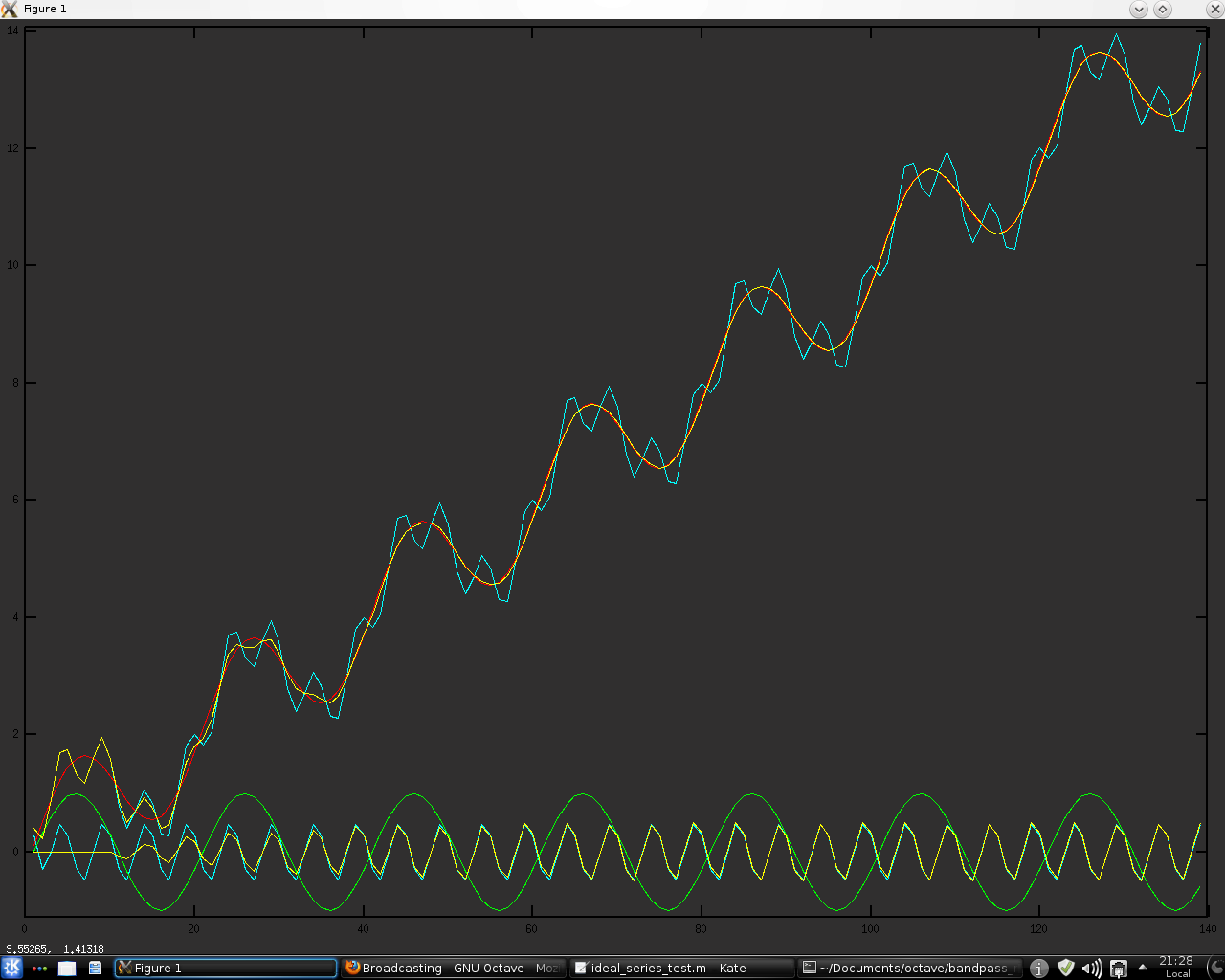

The following plot shows the original indicator in black and the neural net version of it in blue

over the data shown in this plot of 10 minute bars of the EURUSD forex pair.The red indicator in the plot above is the 1 bar momentum of the neural net indicator plot.

To judge the quality of this indicator I used the entropy measure (measured over 14,000+ 10 minute bars of EURUSD), the results of which are shown next.

entropy_indicator_original = 0.9485

entropy_indicator_nn_output = 0.9933

An entropy reading of 0.9933 is probably as good as any trading indicator could hope to achieve (a perfect reading is 1.0), and so the next thing was to quickly back test the indicator's performance. Based on the logic of the indicator, the obvious long (short) signals are being above (below) the zero line, or equivalently the sign of the indicator, and for good measure I also tested the sign of the momentum and some simple moving averages thereof.
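For readers unfamiliar with this kind of entropy measure, the Python sketch below shows one common way to compute a reading that tops out at 1.0. It is an assumption that the measure used here is of this type: the sketch bins the indicator values into a histogram, computes the Shannon entropy of the bin proportions and divides by the log of the number of bins. The function name, the choice of 20 bins and the sample data are hypothetical.

```python
import math

def relative_entropy(values, n_bins=20):
    """Shannon entropy of a histogram of `values`, divided by log(n_bins).

    Returns 1.0 when every bin is equally populated and approaches 0.0
    when almost all values fall into a single bin.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # all values identical: no information
    counts = [0] * n_bins
    for v in values:
        # Clamp so that the maximum value lands in the last bin.
        k = min(int((v - lo) / (hi - lo) * n_bins), n_bins - 1)
        counts[k] += 1
    total = len(values)
    entropy = -sum((c / total) * math.log(c / total) for c in counts if c > 0)
    return entropy / math.log(n_bins)

evenly_spread = [i / 999 for i in range(1000)]          # fills every bin equally
clustered = [0.0] * 990 + [i / 9 for i in range(10)]    # almost all in one bin

print(round(relative_entropy(evenly_spread), 4))
print(round(relative_entropy(clustered), 4))
```

On this reading, a high value means the indicator spreads its readings evenly across its range rather than spending most of its time clustered around a few values.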

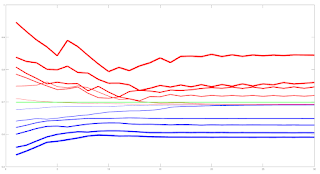

The following plot shows the equity curves of this quick test where it is visually clear that the blue equity curves are "the best" when plotted in relation to the black "buy and hold" equivalent equity curve. These represent the equity curves of a 3 bar simple moving average of the 1 bar momentum of both the original formulation of the indicator and the neural net implementation. I would point out that these equity curves represent the theoretical equity resulting from trading the London session after 9:00am BST and stopping at 7:00am EST (usually about noon BST) and then starting trading again at 9:00am EST until 17:00pm EST. This schedule avoids the opening sessions (7:00 to 9:00am) in both London and New York because, from my observations of many OHLC charts such as shown above, there are frequently wild swings where price is being pushed to significant points such as volume profile clusters, accumulations of buy/sell orders etc. and in my opinion no indicator can be expected to perform well in that sort of environment. Also avoided are the hours prior to 7:00am BST, i.e. the Asian session or overnight session.
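The signal logic behind such a test can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the back test actually run: the position taken at the close of one bar earns the log return of the next bar, there are no trading costs, and the session schedule is represented by a hypothetical boolean `tradable` mask. All function names and data values are made up.

```python
def momentum(series, lag=1):
    """lag-bar momentum; the first `lag` entries are None (undefined)."""
    return [None] * lag + [series[t] - series[t - lag]
                           for t in range(lag, len(series))]

def sma(series, length):
    """Simple moving average; None until `length` defined values are available."""
    out = []
    for t in range(len(series)):
        window = series[max(0, t - length + 1): t + 1]
        if len(window) < length or any(v is None for v in window):
            out.append(None)
        else:
            out.append(sum(window) / length)
    return out

def sign_positions(signal):
    """+1 long, -1 short, 0 flat (signal undefined or exactly zero)."""
    return [0 if s is None or s == 0 else (1 if s > 0 else -1) for s in signal]

def equity_curve(log_returns, positions, tradable):
    """Cumulative log return; the position at bar t earns the return of bar t + 1."""
    equity, total = [0.0], 0.0
    for t in range(len(log_returns) - 1):
        if tradable[t + 1]:
            total += positions[t] * log_returns[t + 1]
        equity.append(total)
    return equity

# Hypothetical indicator readings and bar log returns, for illustration only.
indicator = [0.10, 0.12, 0.15, 0.11, 0.05, -0.02, -0.06, -0.01]
log_returns = [0.0, 0.0004, 0.0003, -0.0002, -0.0005, -0.0004, -0.0001, 0.0006]
tradable = [True] * len(log_returns)  # stand-in for the session schedule

signal = sma(momentum(indicator, 1), 3)   # 3 bar SMA of 1 bar momentum
positions = sign_positions(signal)
equity = equity_curve(log_returns, positions, tradable)
print(positions)
```

Setting entries of `tradable` to False for bars that fall in the avoided hours leaves the equity curve flat over those bars.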

Although these equity curves might not, at first glance, be that impressive, especially as they do not account for trading costs etc., my intent in doing these tests was to determine the configuration of a final "decision" output layer to be added to the neural net implementation of the indicator. A 3 bar simple moving average of the 1 bar momentum implies the necessity to include 4 consecutive readings of the indicator output as input to a final "decision" layer. The following simple, hand-drawn sketch shows what I mean:

A discussion of this will be the subject of my next post.
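In the meantime, the claim that 4 consecutive readings suffice can be checked with a short Python sketch. Three 1 bar momentum values span four readings, and their average telescopes to (newest - oldest) / 3, so a fixed weight vector over the four readings reproduces the 3 bar simple moving average exactly. The weights shown are only this arithmetic identity, not the weights of the eventual decision layer, and the readings are hypothetical.

```python
def sma3_of_momentum(readings):
    """3 bar SMA of 1 bar momentum from 4 readings, ordered oldest to newest."""
    moms = [readings[i + 1] - readings[i] for i in range(3)]
    return sum(moms) / 3

def weighted_sum(readings, weights):
    """Dot product of the readings with a fixed weight vector."""
    return sum(r * w for r, w in zip(readings, weights))

# The middle readings cancel in the telescoping sum, so their weights are zero.
decision_weights = [-1 / 3, 0.0, 0.0, 1 / 3]  # oldest to newest

readings = [0.15, 0.11, 0.05, -0.02]  # hypothetical indicator outputs
direct = sma3_of_momentum(readings)
via_weights = weighted_sum(readings, decision_weights)
print(round(direct, 6), round(via_weights, 6))
```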