clear all ;

1;

function x_n = ekf_sine_f( x )

% Dynamical model function for the sine signal.

% Takes the input vector of phase, angular frequency in radians and

% amplitude and calculates the next values of the states given the

% current vector.

% transition matrix

A = [ 1 1 0 ; % phase in Rads

0 1 0 ; % angular_freq_rads

0 0 1 ] ; % amplitude

x_n = A * x ;

x_n( 1 ) = mod( x_n( 1 ) , 2 * pi ) ;

endfunction

function Y = ekf_sine_h( x )

% Measurement model function for the sine signal.

% Takes the input vector of phase, angular frequency and amplitude and

% calculates the current value of the sine given the input vector.

f = x( 1 , : ) ; % phase in radians

a = x( 3 , : ) ; % amplitude

Y = a .* sin( f ) ; % the sine

endfunction

function [ z , A ] = jacobian_transition( fun , x )

z = fun( x ) ;

A = [ 1 1 0 ;

0 1 0 ;

0 0 1 ] ;

endfunction

function [ z , A ] = jacobian_measurement( fun , x )

z = fun( x ) ;

A = [ x( 3 ) * cos( x( 1 ) ) 0 sin( x( 1 ) ) ] ;

endfunction

%%%%%%%%%%%%%%%%%%% Generate a sine wave %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

pkg load statistics ;

load all_snr ;

load all_hmm_periods_daily ;

N = 250 ; % total dynamic steps i.e.length of data to generate

[ gen_period , gen_states ] = hmmgenerate( N , transprobest , outprobest ) ;

gen_period = gen_period .+ hmm_min_period_add ;

angular_freq_rads = ( 2 * pi ) ./ gen_period ;

real_phase = cumsum( angular_freq_rads ) ; real_phase = mod( real_phase , 2 * pi ) ;

gen_sine = sin( real_phase ) ;

noise_val = mean( [ all_snr(:,1) ; all_snr(:,2) ] ) ;

my_sine_noisy = awgn( gen_sine , noise_val ) ;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

f = @ekf_sine_f ;

h = @ekf_sine_h ;

n = 3 ; % number of states - phase, angular frequency and amplitude

Q = eye( n ) ; % matrix for covariance of process

Q( 1 , 1 ) = var( real_phase ) ;

Q( 2 , 2 ) = var( angular_freq_rads ) ;

Q( 3 , 3 ) = var( sqrt(2) * sqrt( mean( my_sine_noisy.^2 ) ) ) ;

Q = 1 .* Q ; % scale the covariance matrix, a tunable factor ?

R = var( gen_sine .- my_sine_noisy ) ; % measurement noise variance

% initial state

s = [ real_phase( 6 ) ; % phase ( Rads )

mean( angular_freq_rads( 1 : 20 ) ) ; % angular_freq_rads

sqrt(2) * sqrt( mean( my_sine_noisy( 1 : 20 ).^2 ) ) ] ; % amplitude

P = eye( n ) ; % initial state covariance

% allocate memory

xV = zeros( n , N ) ; % record estmates of states

sV = ones( 1 , N ) ; % real amplitudes

pV1 = zeros( n , N ) ; % projection measurments

pV2 = zeros( n , N ) ; % projection measurments

pV3 = zeros( n , N ) ; % projection measurments

for k = 7 : N

z = my_sine_noisy( k ) ; % noisy measurement of sine

% do ekf

[ x1 , A ] = jacobian_transition( f , s ) ; % nonlinear update and linearisation at current state

P = A * P * A' + Q ; % partial update

[ z1 , H ] = jacobian_measurement( h , x1 ) ; % nonlinear measurement and linearisation

P12 = P * H' ; % cross covariance

R = chol( H * P12 + R ) ; % Cholesky factorisation

U = P12 / R ; % K = U / R' ; Faster because of back substitution

s = x1 + U * ( R' \ ( z - z1 ) ) ; % Back substitution to get state update

P = P - U * U' ; % Covariance update, U * U' = P12 / R / R' * P12' = K * P12

xV( : , k ) = s ; % save estimated updated states

pV1( : , k ) = s ; pV1( 1 , k ) = pV1( 1 , k ) + pV1( 2 , k ) ; % for plotting

pV2( : , k ) = s ; pV2( 1 , k ) = pV2( 1 , k ) + 2*pV2( 2 , k ) ; % for plotting

pV3( : , k ) = s ; pV3( 1 , k ) = pV3( 1 , k ) + 3*pV3( 2 , k ) ; % for plotting

endfor

% Plotting

figure(1) ; subplot(3,1,1) ; plot( real_phase , 'k', 'linewidth' , 2 , xV( 1 , : ) , 'r' , 'linewidth' , 2 ) ; grid minor on ;

title( 'Phase State' ) ; legend( 'Actual Phase State' , 'Estimated Phase Sate' ) ; ylabel( 'Radians' ) ;

figure(1) ; subplot(3,1,2) ; plot(angular_freq_rads, 'k', 'linewidth' , 2 , xV(2,:), 'r' , 'linewidth' , 2 ) ; grid minor on ;

title( 'Angular Frequency State in Radians' ) ; legend( 'Actual Angular Frequency' , 'Estimated Angular Frequency' ) ;

figure(1) ; subplot(3,1,3) ; plot( sV , 'k', 'linewidth' , 1 , xV(3,:), 'r' , 'linewidth' , 2 ) ; grid minor on ;

title( 'Amplitude State' ) ; legend( 'Actual Amplitude' , 'Estimated Amplitude' ) ;

sine_est = h( xV ) ;

sine_1 = h( pV1 ) ; sine_1 = shift( sine_1 , 1 ) ;

sine_2 = h( pV2 ) ; sine_2 = shift( sine_2 , 2 ) ;

sine_3 = h( pV3 ) ; sine_3 = shift( sine_3 , 3 ) ;

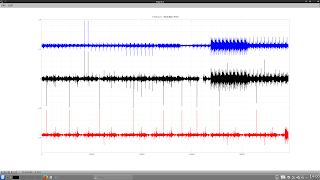

figure(2) ; plot( gen_sine , 'k' , 'linewidth' , 2 , my_sine_noisy , '*b' , 'linewidth' , 2 , sine_est , 'r' , 'linewidth' , 2 ) ;

title( 'Underlying and its Estimate' ) ; legend( 'Real Underlying Sine' , 'Noisy Measured Sine' , 'EKF Estimate of Real Underlying Sine' ) ;

figure(3) ; plot( gen_sine , 'k' , 'linewidth' , 2 , sine_1 , 'r' , 'linewidth' , 2 , sine_2 , 'g' , 'linewidth' , 2 , sine_3 , 'b' , 'linewidth' , 2 ) ;

title( 'Plot of the actual sine wave vs projected estimated sines' ) ;

legend( 'Actual' , 'EKF Projected 1' , 'EKF Projected 2' , 'EKF Projected 3' ) ;the filtered estimate and shifted, future projections using the current estimated states The code is well commented so I won't talk further about it in this post as this is part of ongoing work. A fuller discussion in due course.